If one has been around the Kubernetes space for any significant amount of time, one has probably heard the term “service mesh,” or at least the name of an implementation such as Istio, Linkerd, or Consul. At first, one might wonder why one would need to add something to Kubernetes, an already complex platform that can do a lot. While Kubernetes is very capable of running many kinds of workloads, it was built to be general-purpose, so it makes no assumptions about what kind of workloads should run on it. For some more specialized workloads, there is therefore a need to extend Kubernetes with additional functionality. One of those specialized cases is running microservices, and a service mesh provides functionality for that case on top of what Kubernetes already offers.

Because a service mesh is used to manage microservices, it is important to understand a few defining characteristics of a microservice.

- A microservice on a cluster is not defined by a single running instance; rather, it is defined by the type of service that each instance embodies. A single service can have multiple instances running at once.

- Microservices do not maintain state within the services themselves. That does not imply that the state cannot exist, as there may be an external service that is specifically designed to maintain state, such as a caching service.

- Because they are stateless and can support multiple instances, microservices can scale horizontally, meaning that instances can rapidly be added or removed depending on the demand for that service.

- Services typically use other services. This means that services call services, which may in turn call other services. These chains of service calls also imply that some services will provide functionality to more than one other service, such as a caching service or email service.

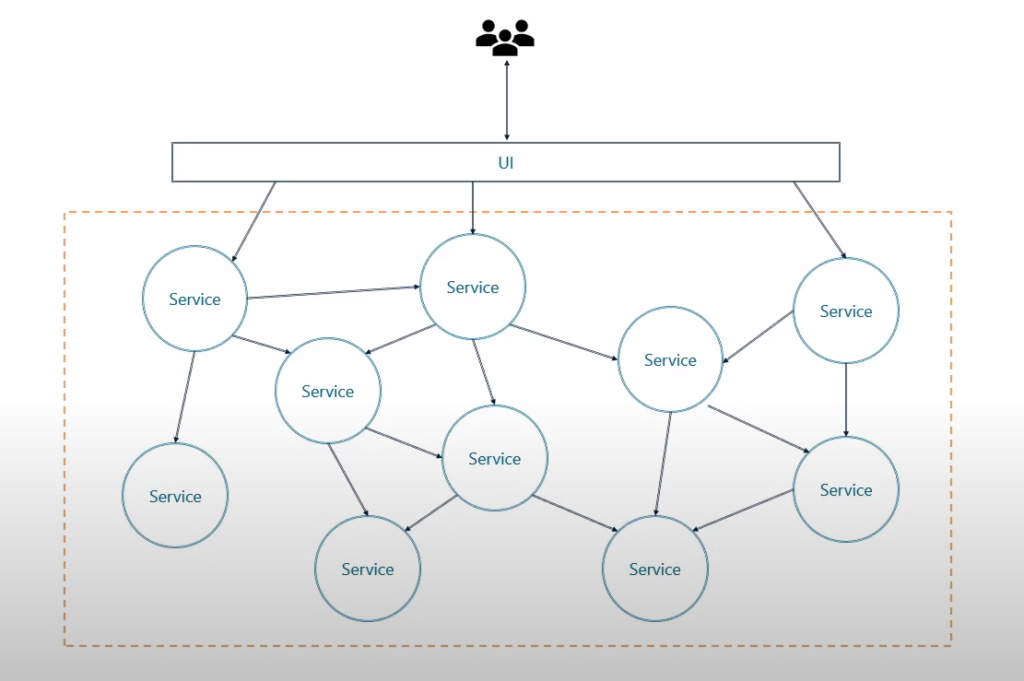

In larger applications, the number of services can grow rapidly, such that there may be dozens or perhaps even hundreds of these services running. Moreover, each service comes with multiple instances. And with services calling services, the web of inter-service calls grows far faster than the number of services itself. It is for this reason that the service mesh was created. At its core, a service mesh is an architectural pattern that helps manage the service-to-service communication within a compute cluster. It does this by providing discoverability for service-to-service calls and other value-added features, such as improved security, monitoring, logging, tracing, and reliability.
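
To put rough numbers on how quickly that web can grow, the following is a minimal sketch that counts an upper bound on the possible caller-to-callee pairs. It assumes that any service could call any other service and that every service runs the same number of instances; both are simplifications, and the function names are made up for illustration.

```python
def potential_call_paths(num_services: int) -> int:
    """Upper bound on directed service pairs (service A calls service B)."""
    return num_services * (num_services - 1)


def potential_instance_paths(num_services: int, instances_per_service: int) -> int:
    """Upper bound on directed instance pairs that belong to different services."""
    return potential_call_paths(num_services) * instances_per_service ** 2


# Illustrative cluster sizes only, each with 3 instances per service.
for n in (5, 20, 100):
    print(n, potential_call_paths(n), potential_instance_paths(n, 3))
```

Real applications use only a fraction of these paths, but the bound shows why tracking the calls by hand stops being practical as the number of services grows.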

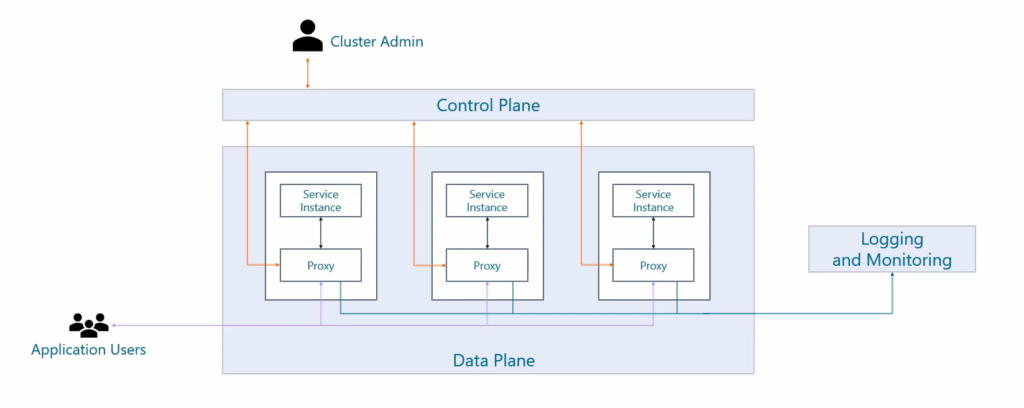

A service mesh typically has two major components: a control plane and a data plane.

The control plane is the part of the service mesh that is responsible for managing the services on the cluster. It provides the resources needed for discoverability as well as the functionality to inject security, monitoring, and reliability features into the services that it is managing.

The data plane is where the actual services run. Instances of the services are defined for the compute cluster, and the service mesh wraps these instances so that the value added by the service mesh is applied automatically. In a Kubernetes context, this is typically done by injecting a sidecar proxy into the pod alongside the running instance of a service. The sidecar proxy then interacts with the instance of the service while receiving commands from the control plane and reporting telemetry back to the monitoring and logging solution. Ultimately, all incoming and outgoing requests for the service flow through its sidecar proxy.
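
The division of labor between the two planes can be sketched as a small in-process simulation. This is only a toy model and not how Istio, Linkerd, or Consul are implemented: `ControlPlane`, `SidecarProxy`, the retry policy, and the flaky email service are all hypothetical names invented for this example, and real sidecars are separate network proxies rather than Python objects.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Handler = Callable[[str], str]


@dataclass
class ControlPlane:
    """Hypothetical control plane: a service registry plus a retry policy."""
    registry: Dict[str, Handler] = field(default_factory=dict)
    max_retries: int = 2

    def register(self, name: str, handler: Handler) -> None:
        self.registry[name] = handler

    def resolve(self, name: str) -> Handler:
        return self.registry[name]


@dataclass
class SidecarProxy:
    """Hypothetical sidecar: every outgoing call from its service goes through it."""
    service_name: str
    control_plane: ControlPlane
    telemetry: List[dict] = field(default_factory=list)

    def call(self, target: str, payload: str) -> str:
        handler = self.control_plane.resolve(target)  # discovery
        # One initial attempt plus the retries allowed by the control plane.
        for attempt in range(1, self.control_plane.max_retries + 2):
            start = time.perf_counter()
            try:
                result = handler(payload)
                ok = True
            except ConnectionError:
                ok = False
            # Record telemetry for every attempt, successful or not.
            self.telemetry.append({
                "source": self.service_name,
                "target": target,
                "attempt": attempt,
                "ok": ok,
                "seconds": time.perf_counter() - start,
            })
            if ok:
                return result
        raise ConnectionError(f"{target} unavailable after {attempt} attempts")


def make_flaky_email_service() -> Handler:
    """Stand-in service that fails on its first call, then succeeds."""
    calls = {"count": 0}

    def handler(payload: str) -> str:
        calls["count"] += 1
        if calls["count"] == 1:
            raise ConnectionError("transient failure")
        return f"sent: {payload}"

    return handler


control_plane = ControlPlane()
control_plane.register("email", make_flaky_email_service())
orders_sidecar = SidecarProxy("orders", control_plane)
response = orders_sidecar.call("email", "order 42 confirmed")
print(response)
print([(t["attempt"], t["ok"]) for t in orders_sidecar.telemetry])
```

The calling service never sees the transient failure: the proxy looks up the target, retries according to the policy it was given, and records each attempt, which is the kind of behavior a mesh adds without changes to the service code.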

Understanding the fundamental reason why a service mesh was created in the first place helps shape the decision on whether one is needed.

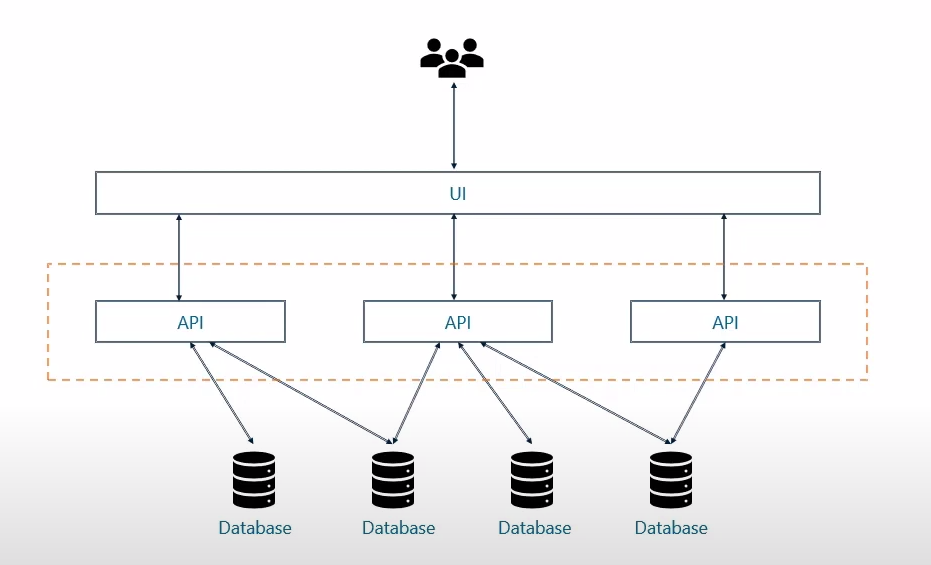

One of the most common approaches to creating microservices is to take an old N-tier application and break its API layer into smaller, discrete, stateless components that can be deployed and scaled independently. Many who go through such an exercise will say that they are creating “microservices” in doing so.

Having created microservices, they then assume that they automatically need a service mesh because they now have a microservice architecture. While these services might be microservices of a sort, the application they are supporting is still fundamentally an N-tier application. The services are, generally speaking, one layer deep: they call a database and rarely, if ever, call another service. In these scenarios, a service mesh does not make sense, because a service mesh is intended to facilitate service-to-service communication. Without that, the service mesh adds very little value.

On the other hand, many newer greenfield applications do have what one might call a fully realized microservice architecture. With this sort of architecture, there is a suite of services that do call other services, and some of these calls may be many layers deep. This is the ideal scenario for a service mesh.

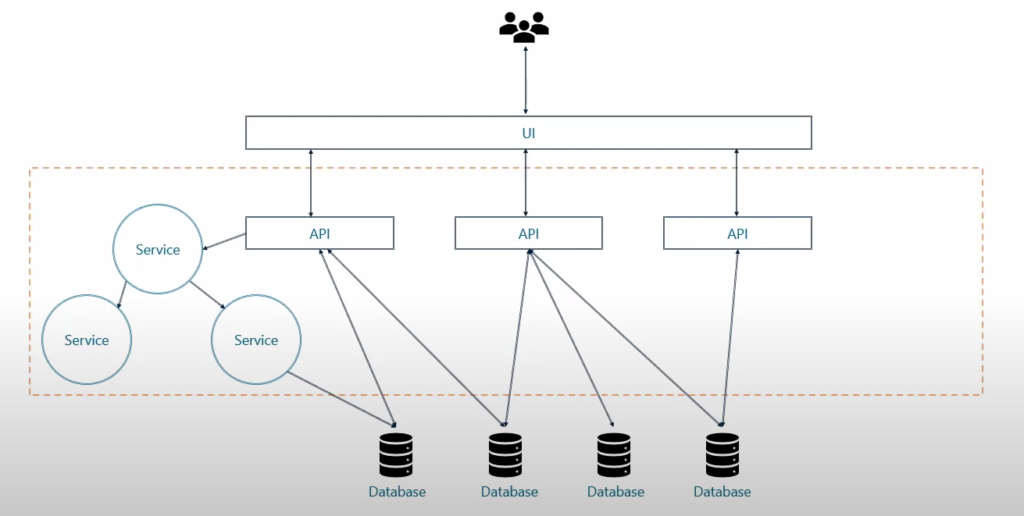

With many applications, though, the reality is somewhere between the flat N-tier application and the fully realized microservice application. Some of the services, in this case, may be flat and never call another service within the service suite. Other services, however, may call into services that might be multiple layers deep. These applications have traits of both an N-tier application, which would not be able to take advantage of a service mesh, and a fully realized microservice application, which would, so the answer here is not always clear. In most cases, the deciding factor is how much of the application relies on service-to-service communication. If that is a significant percentage, then a service mesh would be beneficial, even if the entire application is not taking advantage of it.
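
One rough way to estimate that percentage is to write down which services call which and measure how much of the suite takes part in service-to-service calls. The sketch below does this for a made-up call graph; the service names, the two helper functions, and the choice of metrics are all assumptions for illustration, not an established formula.

```python
from typing import Dict, FrozenSet, List

# Hypothetical call graph: each service maps to the services it calls directly.
# Database calls are deliberately left out, since they are not service-to-service.
CALL_GRAPH: Dict[str, List[str]] = {
    "catalog": [],
    "profile": [],
    "orders": ["inventory", "email"],
    "inventory": ["cache"],
    "email": [],
    "cache": [],
}


def service_to_service_share(graph: Dict[str, List[str]]) -> float:
    """Fraction of services that call, or are called by, another service."""
    if not graph:
        return 0.0
    involved = set()
    for caller, callees in graph.items():
        if callees:
            involved.add(caller)
            involved.update(callees)
    return len(involved) / len(graph)


def max_call_depth(
    graph: Dict[str, List[str]],
    service: str,
    seen: FrozenSet[str] = frozenset(),
) -> int:
    """Longest chain of service-to-service calls that starts at `service`."""
    if service in seen:  # guard against cycles in the call graph
        return 0
    callees = graph.get(service, [])
    if not callees:
        return 0
    return 1 + max(max_call_depth(graph, c, seen | {service}) for c in callees)


share = service_to_service_share(CALL_GRAPH)
print(f"{share:.0%} of services take part in service-to-service calls")
print("deepest chain from orders:", max_call_depth(CALL_GRAPH, "orders"))
```

What counts as a "significant" share remains a judgment call; the numbers only make the discussion concrete.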

Although a service mesh can help manage microservices, there are a few things that a service mesh will NOT do.

- A service mesh will not decrease an application’s complexity. In many ways, a service mesh adds complexity to an environment by adding more components. The tradeoff, though, is better visibility, reliability, and security for services.

- A service mesh will not improve application performance, because it places additional proxies in the path of every call that services make. This adds latency. Moreover, every service instance carries additional compute and memory overhead.

- A service mesh will not reduce management overhead. Rather, it increases it because, in addition to the application, the service mesh itself must be maintained. If the value added by the service mesh outweighs the additional management, then this trade-off may be worth it.
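
The performance cost can be estimated with simple arithmetic. The sketch below assumes that each service-to-service call passes through two sidecar proxies (the caller's and the callee's) and that calls in a chain happen one after another. The per-proxy latency and per-sidecar memory figures are placeholders chosen for easy arithmetic, not measurements of any real mesh.

```python
def added_latency_ms(call_hops: int, per_proxy_ms: float, proxies_per_hop: int = 2) -> float:
    """Extra latency for a sequential chain of calls that all pass through sidecars."""
    return call_hops * proxies_per_hop * per_proxy_ms


def added_memory_mb(service_instances: int, per_sidecar_mb: float) -> float:
    """Extra memory when every service instance gets its own sidecar."""
    return service_instances * per_sidecar_mb


# Placeholder inputs: a 3-hop call chain, 1 ms per proxy, 50 instances, 50 MB per sidecar.
print(added_latency_ms(call_hops=3, per_proxy_ms=1.0))          # 6.0
print(added_memory_mb(service_instances=50, per_sidecar_mb=50))  # 2500
```

Plugging in figures measured on one's own cluster turns the trade-off described in the list above into numbers that can be weighed against the benefits.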

So what is the ultimate answer? There really isn’t one. But fundamentally, one has to ask how much of the application is using service-to-service communication. Should that be significant, then one would do well to weigh the benefits against the costs of implementing a service mesh, and only then choose a service mesh to serve the application.